1) The Bull Market in Grifters.

Joe Weisenthal’s latest newsletter was fantastic, and in it he makes an interesting point:

We live in an age of extreme grift. This is obvious. There are people out there publicly organizing pump and dump schemes. Right now, someone is trying to sell you a dollar’s worth of cryptocurrency for five dollars. Celebrities scam their fans by selling them garbage. It’s all over the place. But that alone isn’t particularly interesting. Grift has been around since day one, and there might even be a cyclical element to it. The economist John Kenneth Galbraith famously talked about the bezzle — the bad faith business practices that produce an illusory wealth effect, which only gets exposed after the bust. The interesting part to me is the total lack of shame. Nobody bothers to try and hide it. And nobody really judges it either. It’s all just right out in the open. The world is awash in “economic nihilism.” Jim Chanos calls it the “golden age of fraud.”

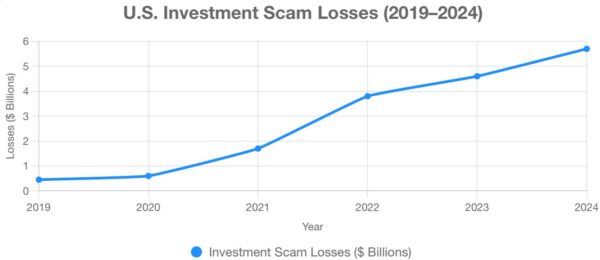

Here’s some data in chart form from the FTC’s Sentinel report on this. Scams have indeed surged in recent years. What gives?

I suspect a lot of this is due to the humongous growth in the crypto space. But if I had to pick an underlying cause, I’d argue it’s happening for the same reason the internet is such a cesspool of bad behavior: we just don’t care about other people, because more and more of our business dealings involve no interpersonal relationship.

I grew up in the darkest, nastiest cesspools of the internet. As Bane says in The Dark Knight Rises, “Ah, you think darkness is your ally? You merely adopted the dark. I was born in it, molded by it.” As a Gen-Xer who was basically raised in AOL chat rooms, I feel like my entire life has involved watching people misbehave on the internet and trying to understand why. Why do grown men and women go online and treat people in a way they would never ever treat someone in real life? Because there’s no accountability. There’s no interpersonal relationship. There’s no integrity. If you call me an a-hole on Twitter using the handle fatfaceman346 (sorry, I took that already), there’s no downside. No consequences. No accountability to be had. If you do that in real life, I’ll probably give you a stern talking-to, make you feel really, really bad about yourself, and then try to explain reserve accounting to you to cleanse you of your sins. See, big consequences there. I kid, kind of.

But this is the same reason scams are becoming so much more pronounced. We’re all distancing ourselves from one another. As our relationships with our phones and AI improve, our relationships with real people decline. We don’t care anymore. The end justifies the means, and all that. It’s actually a sad reality of modern technology, and I say that as someone who generally believes technology is fantastic. I wish it were going to get better, but I suspect it won’t. The next generation will probably date robots and spend more time with their personal robot than with anyone else. That will be nice in lots of ways and terrible in others. And the robots will probably run the scams so the people don’t have to. The robots can scam 24/7, so watch your back.

Anyhow, fun times.

2) Apple Isn’t Interested in Robots.

Apple has been strangely behind the curve on the AI boom, and a recent paper from its researchers, “The Illusion of Thinking,” might shed some light on why. I know you won’t read it, so here’s a summary from GPT, of course:

“[the authors] observe that Large Reasoning Models exhibit a sharp accuracy collapse beyond certain complexity thresholds, and display a paradoxical trend: reasoning effort initially rises with complexity but then diminishes even when token budgets allow further reflection. By comparing LRMs with standard LLMs under equal compute conditions, they identify three distinct performance regimes: in simple tasks, standard models outperform LRMs; in moderately complex tasks, LRMs benefit from the extra reasoning; and in highly complex scenarios, both collapse entirely. Notably, LRMs struggle with precise computation—they don’t reliably employ explicit algorithms and often show inconsistent reasoning across tasks—prompting deeper investigation into the nature of “thinking” in current models.”

I am obviously a big believer in AI, but Apple’s skepticism makes sense to me, at least based on my own experience. For example, I’d argue that something like ChatGPT is already a kind of superintelligence. It is an expert at understanding virtually everything, but it’s not better than the best experts in any given field. That might change, but as of now it understands about 80% of nearly everything, which is far more than most of us do. That’s enough to make it an extraordinarily useful technology. But it won’t replace the experts. In fact, in my view it turns experts into super experts. AI is like having a super assistant you’re constantly getting feedback from and bouncing ideas off of. It helps you process ideas and workflow much more efficiently. In this way it replaces the assistants but not the experts because, like a good assistant, it gets 80% of the work done but can’t quality-control the most important 20%.

The Apple view is essentially consistent with this. And it’s interesting because Apple’s expertise isn’t just software. Apple builds beautifully seamless hardware that integrates with lots of different software. It doesn’t need to build the God AI, because the God AI is going to be just another tool on the hardware device that Apple inevitably builds to crush all the other AI hardware. As I’ve discussed before, I don’t think AI ends with a God AI anyhow. There isn’t going to be a Google of the AI world. This is going to be the most polytheistic technology we’ve ever experienced, because it will be self-replicating in a way none of us has seen before. In other words, when you’re dating the robot that Apple builds, it will run on its own decentralized AI software, and Apple will look very smart for not having sunk hundreds of billions of dollars into AI data centers in an arms race for the God AI.

Or maybe Apple has botched this whole AI thing. I have no idea. Ha.

3) INVESTCON 5.

The latest letter from Howard Marks is truly one of the best of his career, and that’s saying a lot, considering he’s on the Mount Rushmore of investment writing. The first half is a masterclass in understanding value versus price. The latter part is about how Howard relates all of this to the current market environment. He draws a great analogy between investment risk and the Pentagon’s Defense Readiness Conditions, which range from DEFCON 5 to DEFCON 1. Marks lays out his own six-level INVESTCON scale and says we’re currently at INVESTCON 5:

INVESTCON 6: Stop buying

INVESTCON 5: Reduce aggressive holdings and increase defensive holdings

INVESTCON 4: Sell off the remaining aggressive holdings

INVESTCON 3: Trim defensive holdings as well

INVESTCON 2: Eliminate all holdings

INVESTCON 1: Go short

I really like this. I’m not a big advocate of super active asset allocation, but some activity is part of any sensible rebalancing strategy, and choosing the best rebalancing strategy is difficult. For the behaviorally sensitive, it might require more activity. For the behaviorally robust, it might require little to no rebalancing. But that is largely contingent on time horizons, as you can probably guess. So I’d broadly agree with Howard here, with one caveat: I’d add a temporal element to the INVESTCON scale. But I’ve rambled on long enough for now, and I’m sure you’re starting to fall asleep, so I’ll have to pick this up later. Go give his letter a read. It’s a 10/10.

I hope you’re having a great week. And as always, stay disciplined!